
Preferred Networks released ChainerMN, a multi-node extension to Chainer, an open source framework for deep learning

2017.05.09

Tokyo, Japan, 9 May 2017 –

Today, Preferred Networks, Inc. (Headquarters: Chiyoda-ku, Tokyo, President and CEO: Toru Nishikawa, hereinafter PFN) released ChainerMN (MN stands for Multi-Node, https://github.com/pfnet/chainermn), which accelerates training by adding distributed training across multiple GPUs to Chainer, the open source deep learning framework developed by PFN.

Even though GPU performance continues to improve, neural network models keep growing more complex, with large numbers of parameters and much larger training datasets, so training them requires more and more computational power. Today, a single training session commonly takes more than a week on a single node of a state-of-the-art computer.

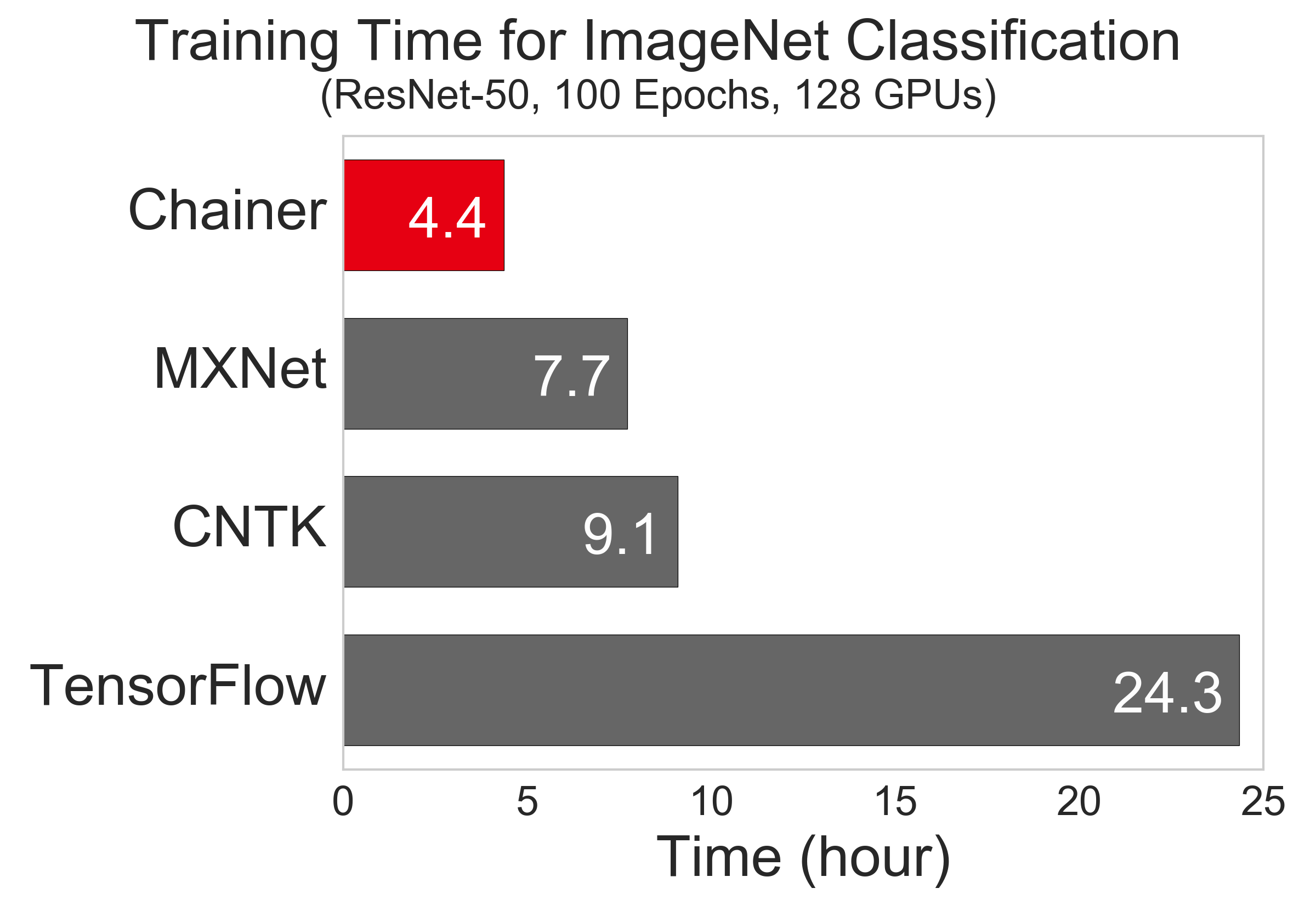

Aiming to give researchers an efficient way to iterate flexibly through trial and error while using large training datasets, PFN developed ChainerMN, a multi-node extension for high-performance distributed training built on top of Chainer. We demonstrated that ChainerMN finished training a model in about 4.4 hours using 32 nodes and 128 GPUs, a job that would take about 20 days on a single-node, single-GPU machine.
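As a rough sanity check on these figures, the wall-clock speedup and parallel efficiency can be computed directly. The sketch below treats "about 20 days" as exactly 480 hours and "about 4.4 hours" as exact, so the results are approximations; the function name `scaling_stats` is hypothetical.

```python
def scaling_stats(single_gpu_hours: float, multi_gpu_hours: float, num_gpus: int):
    """Return (speedup, parallel efficiency) for a multi-GPU training run.

    speedup    = T_1 / T_N   (wall-clock time on 1 GPU divided by time on N GPUs)
    efficiency = speedup / N (1.0 would be perfect linear scaling)
    """
    speedup = single_gpu_hours / multi_gpu_hours
    efficiency = speedup / num_gpus
    return speedup, efficiency


# Approximate figures from the announcement: ~20 days on 1 GPU vs ~4.4 hours on 128 GPUs.
speedup, efficiency = scaling_stats(20 * 24, 4.4, 128)
print(f"speedup ~ {speedup:.0f}x, efficiency ~ {efficiency:.0%}")  # speedup ~ 109x, efficiency ~ 85%
```

Under these assumptions, the 128-GPU run is roughly 109 times faster than a single GPU, or about 85% of ideal linear scaling.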

Performance comparison experiment between ChainerMN and other frameworks

https://research.preferred.jp/2017/02/chainermn-benchmark-results/

We compared ChainerMN's benchmark results with those of other popular multi-node frameworks. In our 128-GPU experiments with a practical setting, in which accuracy is not significantly sacrificed for speed, ChainerMN outperformed the other frameworks.

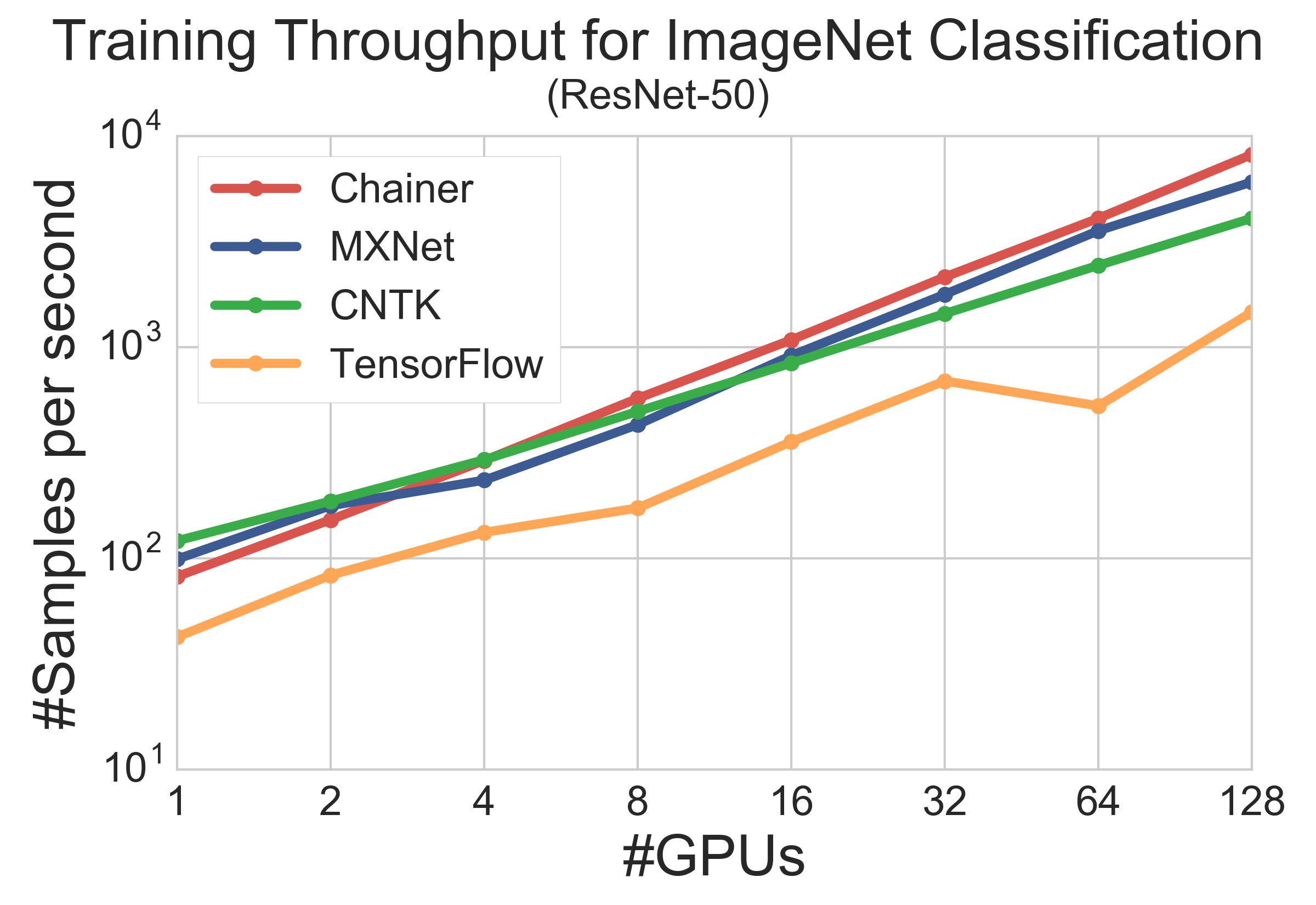

When comparing scalability, we found that although the single-GPU throughput of MXNet and CNTK (both written in C++) is higher than that of ChainerMN (written in Python), ChainerMN achieved the highest throughput with 128 GPUs, making it the most scalable of the frameworks compared. This result is due to ChainerMN's design, which is optimized for both intra-node and inter-node communication.
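The plain-Python sketch below illustrates the general idea of a two-level (hierarchical) allreduce, a standard technique that treats intra-node and inter-node communication differently: gradients are first summed among the GPUs inside each node, the per-node sums are then combined across nodes, and the result is broadcast back to every GPU in each node. It is a simplified simulation, not ChainerMN's actual implementation (which relies on MPI and NCCL); lists of floats stand in for GPU gradient buffers, and all function names are hypothetical.

```python
from typing import List

Vector = List[float]


def elementwise_sum(vectors: List[Vector]) -> Vector:
    """Sum equally sized gradient vectors element by element."""
    return [sum(values) for values in zip(*vectors)]


def hierarchical_allreduce(cluster: List[List[Vector]]) -> List[List[Vector]]:
    """Simulate a two-level allreduce.

    cluster[n][g] is the gradient held by GPU g on node n.
    Returns the same shape, with every GPU holding the global sum.
    """
    # Step 1 (intra-node): reduce each node's GPU gradients to one node-local sum.
    # On real hardware this step uses the fast links inside a machine.
    node_sums = [elementwise_sum(gpus) for gpus in cluster]

    # Step 2 (inter-node): only one vector per node takes part in the
    # slower network exchange between machines.
    global_sum = elementwise_sum(node_sums)

    # Step 3 (intra-node): broadcast the global sum back to every GPU in each node.
    return [[list(global_sum) for _ in gpus] for gpus in cluster]


def flat_allreduce(cluster: List[List[Vector]]) -> Vector:
    """Reference result: sum every GPU's gradient directly."""
    return elementwise_sum([grad for gpus in cluster for grad in gpus])


# Two nodes with two GPUs each; each gradient has three elements.
cluster = [
    [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]],
    [[7.0, 8.0, 9.0], [10.0, 11.0, 12.0]],
]
result = hierarchical_allreduce(cluster)
print(result[1][0])  # [22.0, 26.0, 30.0]
```

Every GPU ends up with the same sum a flat allreduce over all GPUs would produce, but only one participant per node joins the inter-node step.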

Existing Chainer users can easily benefit from the performance and scalability of ChainerMN simply by changing a few lines of their original training code.
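Multi-GPU training of this kind typically follows the synchronous data-parallel pattern: each worker trains on its own shard of the data, gradients are averaged across workers with an allreduce, and every worker applies the same update so the model replicas stay identical. The plain-Python simulation below shows that pattern on a one-parameter linear model. It is a hypothetical sketch of the technique, not ChainerMN's API; `local_gradient`, `allreduce_mean`, and `train_data_parallel` are illustrative names.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def local_gradient(w: float, shard: List[Sample]) -> float:
    """Gradient of the mean squared error of y ~ w * x over one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def allreduce_mean(values: List[float]) -> float:
    """Stand-in for an allreduce: every worker receives the average gradient."""
    return sum(values) / len(values)


def train_data_parallel(data: List[Sample], num_workers: int, lr: float, steps: int) -> float:
    # Split the dataset into equally sized shards, one per worker
    # (samples that do not divide evenly are dropped in this sketch).
    shard_size = len(data) // num_workers
    shards = [data[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]

    w = 0.0  # every worker starts from the same initial weight
    for _ in range(steps):
        # Each worker computes a gradient on its own shard (in parallel in practice).
        grads = [local_gradient(w, shard) for shard in shards]
        # Gradients are averaged across workers, so all replicas apply the same update.
        w -= lr * allreduce_mean(grads)
    return w


# Data generated from y = 3x; 4 workers with 2 samples each.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
w = train_data_parallel(data, num_workers=4, lr=0.01, steps=200)
print(round(w, 3))  # 3.0
```

Because the shards are equally sized, the averaged gradient equals the full-batch gradient, so the distributed run follows the same trajectory as single-worker training on all the data.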

ChainerMN has already been used in multiple projects in a variety of fields such as natural language processing and reinforcement learning.

About the open source deep learning framework Chainer

Chainer is a Python-based deep learning framework developed by PFN. Its “Define-by-Run” approach, in which the network is defined on the fly as the forward computation runs, lets users design complex neural networks easily and intuitively while delivering high performance. Since being open-sourced in June 2015, Chainer has become one of the most popular frameworks, attracting not only the academic community but also many industrial users who need a flexible framework to harness the power of deep learning in their research and real-world applications. (http://chainer.org/)
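To illustrate what “Define-by-Run” means, the toy reverse-mode autodiff below records its computation graph while ordinary Python code executes, so a plain `if` statement decides which operations become part of the graph for a given input. This is a hypothetical, heavily simplified sketch of the idea, not Chainer's API; the `Var` class and the function `f` are illustrative names.

```python
class Var:
    """A scalar that records how it was computed while the forward pass runs."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # (parent Var, local gradient) pairs recorded at run time
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    def backward(self):
        # Topologically order the graph recorded during the forward pass,
        # then propagate gradients from the output back to the inputs.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local_grad in node.parents:
                parent.grad += local_grad * node.grad


def f(x):
    # Ordinary Python control flow: the recorded graph depends on the input value.
    if x.value > 0:
        return x * x * x  # x^3, derivative 3x^2
    return x + x          # 2x, derivative 2


a = Var(2.0)
f(a).backward()
print(a.grad)  # 12.0

b = Var(-1.0)
f(b).backward()
print(b.grad)  # 2.0
```

Because the graph is built during execution, the two calls record different operations, which is what makes the Define-by-Run style convenient for models whose structure depends on the input.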

About Preferred Networks, Inc.

PFN was founded in March 2014 with the aim of applying deep learning technology to business, with a focus on IoT. Its Edge Heavy Computing approach processes the enormous amount of data generated by devices in a distributed and collaborative manner at the edge of the network, driving innovation in three priority business areas: transportation systems, manufacturing, and bio-healthcare.

PFN develops and provides solutions based on the Deep Intelligence in-Motion (DIMo, Daimo) platform, which provides state-of-the-art deep learning technology. We are promoting advanced initiatives in collaboration with world-leading organizations such as Toyota Motor Corporation, Fanuc Inc., and National Cancer Research Center. (https://www.preferred.jp/en/)

*Chainer(R) and DIMo(TM) are trademarks of Preferred Networks, Inc. in Japan and other countries.