PFN Begins Development of Generative AI Processor MN-Core L1000

Energy-efficient MN-Core architecture and 3D-stacked distributed memory for up to ten-fold increase in generative AI inference speed

2024.11.15

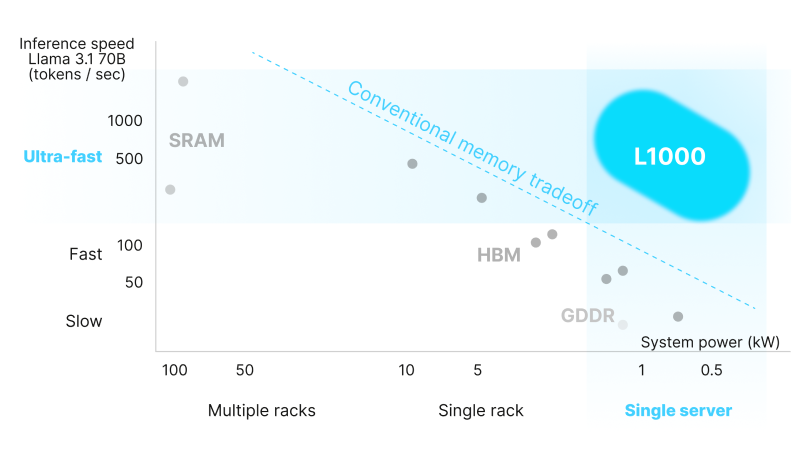

TOKYO – November 15, 2024 – Preferred Networks, Inc. (PFN) today announced that it has begun developing MN-Core™ L1000, a new processor specifically designed for generative AI, including large language models, which it aims to release in 2026. PFN plans to market L1000 as the latest product in its proprietary MN-Core series of AI processors. Because L1000 is optimized for generative AI inference, PFN expects it to achieve up to a ten-fold increase in computing speed compared with conventional processors such as graphics processing units (GPUs).

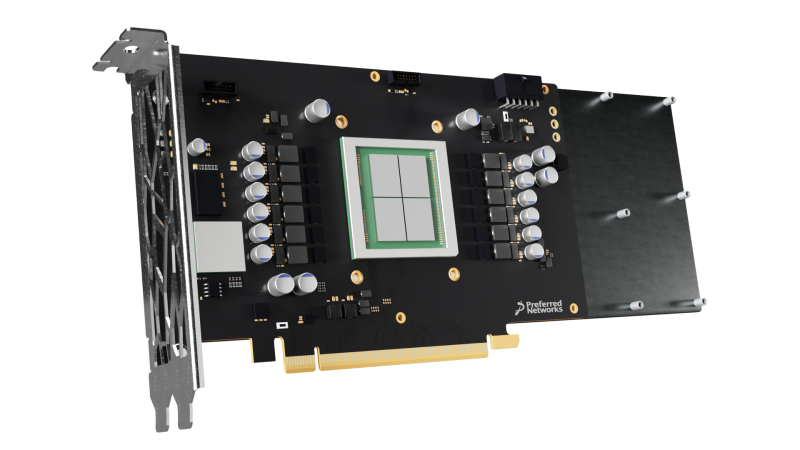

MN-Core™ L1000 for generative AI (concept rendering)

Numerous GPUs and other high-performance processors are used today to develop generative AI models, as the training process requires vast amounts of computing resources, data and parameters. Running a trained generative AI model, a process known as inference, requires far fewer computing resources than training. Inference does, however, require the processor to read hundreds of gigabytes of data each time it generates a unit of text, image or other output. This makes the narrow memory bandwidth of conventional processors a major bottleneck for the time it takes to run generative AI. To address this limitation, PFN is designing L1000 so that it can transfer data between memory (data storage) and logic (arithmetic units) at high speed and with low power consumption during the inference phase of generative AI models.
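The bandwidth bottleneck described above can be illustrated with a rough calculation: if every generated unit requires reading a fixed amount of data from memory, the generation rate cannot exceed the memory bandwidth divided by that amount. The following Python sketch shows this upper bound. The function name and all figures are hypothetical and chosen only for illustration; they are not specifications of L1000 or of any particular GPU.

```python
def max_units_per_second(bandwidth_gb_per_s: float, gb_read_per_unit: float) -> float:
    """Upper bound on generation rate when memory reads are the only limit.

    Assumes each generated unit (e.g. a token) requires reading
    gb_read_per_unit gigabytes from memory, and ignores compute time.
    """
    if bandwidth_gb_per_s <= 0 or gb_read_per_unit <= 0:
        raise ValueError("both arguments must be positive")
    return bandwidth_gb_per_s / gb_read_per_unit


# Hypothetical figures for illustration only (not product specifications).
data_per_unit_gb = 200.0        # data read per generated unit
narrow_bandwidth = 2000.0       # GB/s, a narrower memory interface
wide_bandwidth = 8000.0         # GB/s, a wider memory interface

baseline = max_units_per_second(narrow_bandwidth, data_per_unit_gb)
wider = max_units_per_second(wide_bandwidth, data_per_unit_gb)

print(f"narrow: {baseline:.1f} units/s, wide: {wider:.1f} units/s")
print(f"speed-up from bandwidth alone: {wider / baseline:.1f}x")
```

Under this simplified model, the generation rate scales in direct proportion to memory bandwidth, which is why widening the path between memory and logic matters more for inference than adding arithmetic units.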

Major characteristics of MN-Core L1000 processor for generative AI

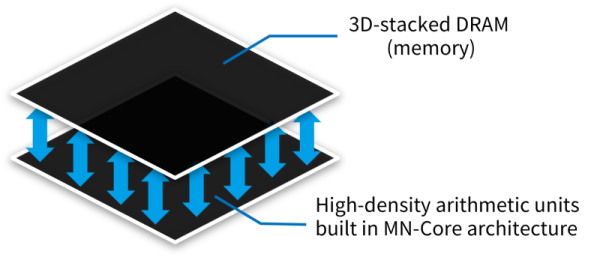

L1000 combines the proven architecture of the MN-Core series, which PFN has been developing since 2016, and the latest 3D-stacking technology.

1. 3D-stacked dynamic random access memory (DRAM)

L1000 features a 3D-stacked architecture in which memory is layered on top of the logic, resulting in wider memory bandwidth than high bandwidth memory (HBM), which is commonly used in high-end GPUs. L1000 also uses DRAM, a higher-capacity and more affordable option than static random access memory (SRAM), which is increasingly adopted in AI processors.

2. MN-Core architecture

The MN-Core series’ architecture is characterized by densely packed arithmetic units and distributed memory on the chip, both controlled by software to minimize power consumption and waste heat. The architecture demonstrated its energy efficiency when MN-3, PFN’s supercomputer powered by the first-generation MN-Core, topped the Green500 list three times in 2020 and 2021. The architecture’s energy efficiency also helps mitigate the heat generated by 3D-stacked memory, which is a known technical challenge for 3D stacking.

By combining the two technologies, PFN expects L1000 to achieve both up to a ten-fold increase in speed and a significant decrease in power consumption for generative AI inference compared with GPUs and other conventional processors.

Increased speed will reduce the computing cost of running generative AI models and enable users to run them in on-premises environments or embed them in software applications. PFN will continue to facilitate the use of generative AI in business and everyday life on both the hardware and software sides, including the development of PLaMo™, its foundation model with high Japanese-language performance.

About MN-Core series of AI processors

Jointly developed by PFN and Kobe University, the MN-Core™ series of processors is optimized for the matrix operations that are essential for deep learning. To maximize the number of arithmetic units on the chip, other functions such as network control circuits, cache controllers and command schedulers are implemented in the compiler software rather than in hardware, achieving highly efficient deep learning operations while keeping costs down. MN-3, PFN’s supercomputer powered by MN-Core, topped the Green500 list of the world’s most energy-efficient supercomputers three times between June 2020 and November 2021. MN-Core 2, the second generation in the series, started operating in 2023. In September 2024, PFN launched MN-Server 2, powered by eight MN-Core 2 chips, and the MN-Core 2 Devkit workstation, powered by one MN-Core 2 chip. PFN also launched Preferred Computing Platform™ (PFCP™), a cloud-based service that gives users access to the computing power of MN-Server 2.